Microservices and programming languages

Microservices can be built using most modern languages. Suggesting any single language is best for building services undermines one of the key value propositions of the architecture: the freedom and flexibility to select the best technology for the job. A quick search for the best languages for building microservices yields a wide range of potential candidates. However, when looking at the intersection of candidate lists, the top three generally include Java, Node.js, and Go. In this article, we will be focusing on Java.

Microservices and Java

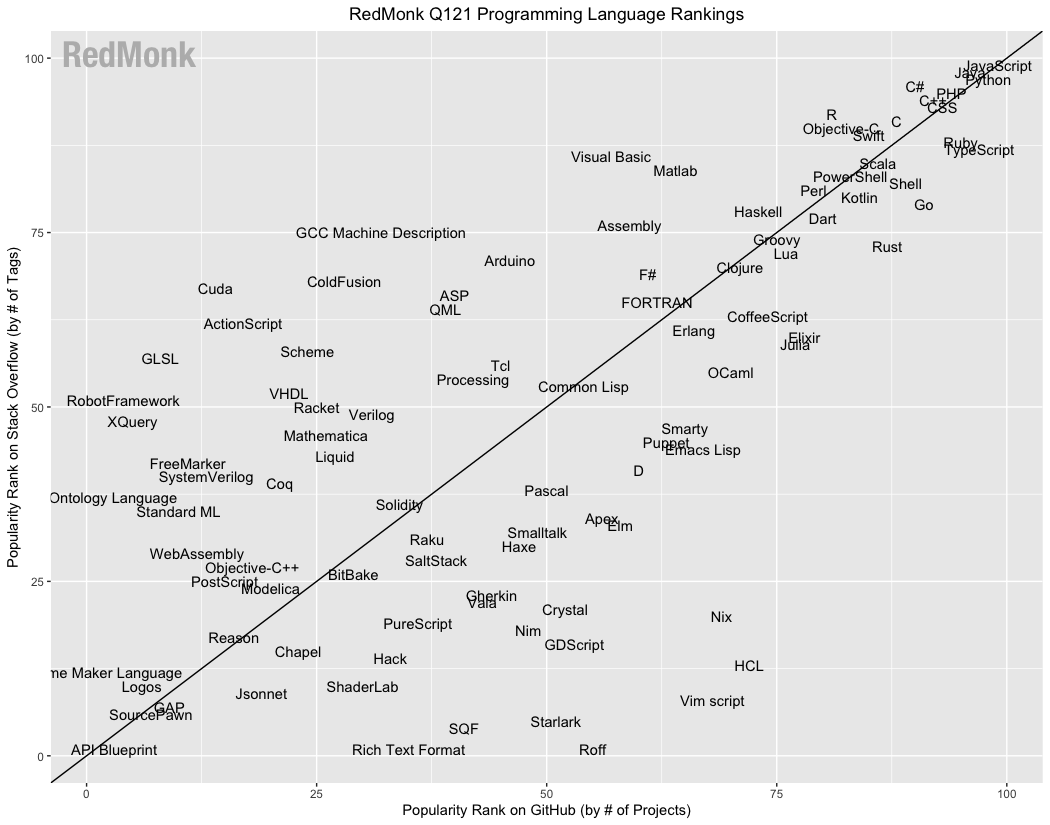

Java has been a popular programming language for a long time. A visit to the TIOBE index or RedMonk index illustrates that, as of this writing, Java has held one of the top three positions since 2001.

Java microservice support

From its initial release, Java has included networking support. This fact is not surprising considering that the corporate motto of Sun Microsystems, the company that originally created Java, was:

The Network is the Computer.

Distributed programming is in Java's DNA. While language support for networking makes microservices possible, it is painfully low-level. Fortunately, with Java, we have a host of microservice frameworks to mitigate our suffering. These include Dropwizard, Vert.x, Micronaut, Helidon, Quarkus, and of course, the leading Java microservice framework, Spring Boot.
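
To give a feel for what "low-level" means here, the following is a minimal sketch of an HTTP endpoint written with only the JDK's built-in `com.sun.net.httpserver` package and no framework. The class name `BareBonesService`, the `/hello` path, and port 8080 are illustrative assumptions, not anything prescribed by Java or by the frameworks listed above.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

public class BareBonesService {

    // Starts a tiny HTTP service on the given port (0 lets the OS pick a free one).
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/hello", exchange -> {
            // Headers, status code, and body bytes are all handled by hand.
            byte[] body = "hello".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = start(8080); // hypothetical port chosen for illustration
        System.out.println("Listening on port " + server.getAddress().getPort());
    }
}
```

Even this single endpoint needs manual handling of status codes, content length, and streams. Routing, serialization, configuration, and health checks are the kind of work a microservice framework takes over.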

The problem with Java microservices

Before we get started, we must address several valid concerns often raised when discussing Java microservices. While Java has a wealth of support for building microservices, it suffers from a couple of critical issues, specifically: longer startup time and larger memory footprint. These issues arise from the use of the Java Virtual Machine (JVM) and are not exclusive to Java. Any language running in the JVM (e.g., Scala, Kotlin, Clojure, Groovy, JRuby, etc.) is also affected.

Service Startup Time

Before a Java microservice can begin executing, its host JVM must first launch and initialize itself. The JVM is itself an application that runs the Java application, so before the service can even be loaded, the JVM must first be started. The time it takes for the JVM to start determines the absolute minimum startup time for any Java application. Once initialized, the JVM must perform quite a bit of work under the covers to launch the service's bytecode. At a high level, the JVM performs three primary tasks when launching an application: loading, linking, and initialization.

Loading

For dynamically loaded languages like Java, a portion of the application code is loaded at startup, with additional code loaded on demand as it is needed. The JVM specification defines loading as:

Loading is the process of finding the binary representation of a class or interface type with a particular name and creating a class or interface from that binary representation.

The compiled bytecode for every class and interface that comprises the application must be retrieved from its jar file container and loaded into the JVM's memory. To accomplish this, Java depends on two types of classloaders: a Bootstrap ClassLoader, which is built into the JVM and has its loading policy defined by the JVM Specification, and User-Defined ClassLoaders which allow application developers to designate a custom loading policy. All classes loaded by the JVM must be loaded by one of these types of loaders.

Each application class and interface, as well as each of its transitive dependencies, must be loaded. The aggregate load time is then added to the JVM startup time to calculate the overall startup time. The complexity of the microservice, measured by the total number of classes and interfaces loaded, directly impacts the startup time.
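
The two kinds of loaders can be observed from inside a running program. The sketch below is an illustration only, with the class name `LoaderPeek` made up for this example. It relies on the documented behavior that `Class.getClassLoader()` may return `null` for classes defined by the bootstrap loader, and it uses the standard `ClassLoadingMXBean` to report how many classes the JVM has loaded so far.

```java
import java.lang.management.ManagementFactory;

public class LoaderPeek {

    // Describes which loader defined a class; the bootstrap loader is reported as null.
    static String loaderOf(Class<?> type) {
        ClassLoader loader = type.getClassLoader();
        return loader == null ? "bootstrap" : loader.getClass().getName();
    }

    public static void main(String[] args) {
        // Core library class, defined by the bootstrap loader.
        System.out.println("String     -> " + loaderOf(String.class));
        // Application class, defined by a loader other than the bootstrap loader.
        System.out.println("LoaderPeek -> " + loaderOf(LoaderPeek.class));

        // Even a trivial program pulls in many classes before main() runs.
        int loaded = ManagementFactory.getClassLoadingMXBean().getLoadedClassCount();
        System.out.println("Classes loaded so far: " + loaded);
    }
}
```

Running the same count inside a real service, after its framework has started, gives a rough sense of how much loading work sits behind its startup time.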

Linking

The next process the JVM must perform is linking. The JVM specification describes linking as:

Linking a class or interface involves verifying and preparing that class or interface, its direct superclass, its direct superinterfaces, and its element type (if it is an array type), if necessary. Resolution of symbolic references in the class or interface is an optional part of linking.

Verification

Verification ensures each class or interface conforms to the structural requirements of the JVM. Additionally, verification may require additional classes and interfaces to be loaded. During verification, the JVM will ensure that:

- There are no uninitialized variables.

- No access rules for private data and methods are violated.

- All method calls match the object reference.

- There are no operand stack overflows or underflows.

- All local variable uses and stores are valid.

- All JVM instruction arguments are of valid types.

- No final classes are subclassed and no final methods are overridden.

- All field references and method references have valid names, valid classes, and a valid type descriptor.

Preparation

Preparation is the process of creating the static fields for a class or interface and initializing them to their default values.

Resolution

For various JVM instructions that make symbolic references to the JVM's run-time constant pool (e.g., anewarray, checkcast, getfield, getstatic, instanceof, invokedynamic, invokeinterface, invokespecial, invokestatic, invokevirtual, ldc, ldc_w, multianewarray, new, putfield, and putstatic), the linking process requires an additional step. This resolution step dynamically determines concrete values from the symbolic references in the run-time constant pool.

- jdeps is a class and module dependency analyzer that identifies the classes and modules required for a given application.

- jlink allows us to build an optimized Java runtime image that includes only those classes and modules needed by the application.

- native-image.properties - This file is used to configure the native image builder's native-image command-line arguments.

- reflect-config.json - Native image has partial support for reflection. However, to take advantage of this, we may need to provide the builder with additional metadata for those program elements. The reflect-config.json file provides this configuration information. During the build, the native image builder performs a static analysis of the application and attempts to automatically detect calls to the Reflection API. In situations where the builder is unable to automatically detect reflection calls, they must be manually added to this file.

- proxy-config.json - Because not every Java dynamic proxy can be detected automatically, we can also manually specify the dynamic proxy classes to be generated by the native image builder by editing this configuration file.

- resource-config.json - Application resources on the classpath are not automatically added to the native image by default. Resources called by Class.getResource(), Class.getResourceAsStream() or similar classloader methods must be explicitly configured in the resource-config.json file.

- jni-config.json - GraalVM native image supports JNI reflection, Java-to-native method calls, native-to-Java method calls, and object creation through configuration in this file.

Here again, we see that the JVM must perform a non-trivial amount of work to verify, prepare, and potentially resolve every application class loaded. The sum of these operations is added to the service's total startup time.

Initialization

Now that we have loaded and linked our classes, we come to the last step: Initialization.

Initialization of a class or interface consists of executing the class or interface initialization method <clinit> (§2.9.2).

Before we can start executing the application, each class's static fields must be initialized, along with those of each ancestral superclass. The JVM must traverse each class's hierarchy and initialize any uninitialized classes. Here we see another impact on startup time.
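
The traversal of the class hierarchy can be made visible with static initializer blocks. The following sketch uses hypothetical classes (`InitOrder`, `Base`, `Derived`) invented for this illustration. It records the order in which the initializers run when a static method on the subclass is first called.

```java
import java.util.ArrayList;
import java.util.List;

public class InitOrder {

    // Records the order in which class initializers run.
    static final List<String> LOG = new ArrayList<>();

    static class Base {
        static { LOG.add("Base"); }
    }

    static class Derived extends Base {
        static { LOG.add("Derived"); }
        static int answer() { return 42; }
    }

    // Calling a static method on Derived triggers its initialization,
    // which first initializes its superclass Base.
    static List<String> touchDerived() {
        Derived.answer();
        return LOG;
    }

    public static void main(String[] args) {
        System.out.println(touchDerived()); // prints [Base, Derived]
    }
}
```

Each initializer runs at most once per class, so a second call to `touchDerived()` adds nothing to the log. In a real service, the same chain of work runs for every class touched on the way to the first request.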

As we've seen, each phase requires a finite amount of time. The aggregate time needed by the JVM delays the actual start of the service. For services that may start and stop frequently, this may introduce unacceptable performance delays and will incur additional resource costs for an as-yet unresponsive service. While trivial for a single service, the cumulative cost when deploying hundreds or thousands of duplicate services may be non-trivial.
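
One rough way to observe this delay is to ask the JVM when it started and compare that with the moment application code first runs. The sketch below assumes a class name (`StartupProbe`) chosen for this example and uses the standard `RuntimeMXBean`. The figure it prints is approximate and depends on the machine and JVM version.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.RuntimeMXBean;

public class StartupProbe {

    // Milliseconds elapsed since the JVM process started, as reported by the runtime.
    static long millisSinceJvmStart() {
        RuntimeMXBean runtime = ManagementFactory.getRuntimeMXBean();
        return System.currentTimeMillis() - runtime.getStartTime();
    }

    public static void main(String[] args) {
        // Reached only after the JVM has booted and this class has been
        // loaded, linked, and initialized.
        System.out.println("main() reached after ~" + millisSinceJvmStart() + " ms");
    }
}
```

Calling the same method at the point where a service reports itself ready, rather than at the top of `main`, would include the framework's own loading and initialization work in the measurement.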

Service memory footprint

In addition to the impact on startup time, the JVM requires a considerable amount of memory even before it has loaded the service code. As mentioned earlier, the JVM is, itself, an extremely complex application. With this complexity comes a corresponding increase in memory footprint. Additionally, Java's core runtime library has grown quite large over its lifetime, which adds to the application's footprint. With the advent of Project Jigsaw and Java 9, the Java SDK received two new tools to help trim the core memory footprint: jdeps and jlink.
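
Part of this footprint can be inspected from within the process. The sketch below, with the class name `FootprintProbe` assumed for illustration, uses the standard `MemoryMXBean` to report heap and non-heap usage. The non-heap figure covers JVM-managed areas such as class metadata and compiled code. Neither number captures the full resident size of the process as seen by the operating system.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

public class FootprintProbe {

    // Bytes of non-heap memory (class metadata, code cache, and similar) currently in use.
    static long nonHeapBytesUsed() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        return memory.getNonHeapMemoryUsage().getUsed();
    }

    // Bytes of heap memory currently in use.
    static long heapBytesUsed() {
        return ManagementFactory.getMemoryMXBean().getHeapMemoryUsage().getUsed();
    }

    public static void main(String[] args) {
        // This program allocates almost nothing itself, so most of what is
        // reported here is overhead from the runtime and core libraries.
        System.out.printf("heap used:     %,d bytes%n", heapBytesUsed());
        System.out.printf("non-heap used: %,d bytes%n", nonHeapBytesUsed());
    }
}
```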

With Java 9, we can reduce the class memory footprint to include only those classes necessary for the application. While this approach does help reduce the overall memory footprint, it does nothing to reduce the memory overhead of the rest of the Java Runtime.
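
To see which modules a given runtime actually contains, the module system can be queried at run time. The following sketch lists the modules in the JVM's boot layer using the standard `ModuleLayer` API. The class name `RuntimeModules` is an assumption for this example. On a runtime image trimmed with jlink, the list would be expected to be shorter than on a full JDK.

```java
import java.util.List;
import java.util.stream.Collectors;

public class RuntimeModules {

    // Names of the modules resolved into the running JVM's boot layer, sorted.
    static List<String> bootModuleNames() {
        return ModuleLayer.boot().modules().stream()
                .map(Module::getName)
                .sorted()
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> names = bootModuleNames();
        System.out.println(names.size() + " modules in this runtime:");
        names.forEach(name -> System.out.println("  " + name));
    }
}
```

The `java.base` module is always present. Everything else is a candidate for removal if the dependency analysis shows the application never uses it.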

As we saw in the preceding sections, there is a limit to what we can accomplish with a conventional JVM. Fortunately, there is another option.

Introducing The GraalVM

In the days before Sun Microsystems' acquisition by Oracle, Sun's research and development branch (Sun Labs) started the Maxine Virtual Machine Project in 2005 to explore strategies of VM development with modular design and code reuse as primary goals. This approach included writing the virtual machine using the Java language itself in a meta-circular style. After realizing that goal was overly ambitious, the team decided to concentrate on developing a compiler and leverage the existing HotSpot VM. The project continued after Sun's acquisition and was initially released in May 2019 as the production-ready GraalVM (v19.0). Like the Maxine VM, GraalVM is a drop-in replacement for the JRE. GraalVM differs from the JRE in five primary ways: the GraalVM Compiler, the Truffle Language Implementation Framework, the LLVM runtime, the JavaScript runtime, and GraalVM Native Image.

GraalVM Compiler

The GraalVM compiler is a dynamic just-in-time (JIT) compiler that compiles bytecode into machine code. It applies multiple optimization algorithms in response to its analysis of the code's structure and performance. For code using modern Java features like Streams or Lambdas, the compiler can realize better performance than traditional JREs. Low-level operations like garbage collection, memory allocation, and I/O will see more moderate increases.

Truffle Language Implementation Framework

In addition to the GraalVM compiler, GraalVM also contains the Truffle Language Implementation Framework. The framework provides a code library for creating language implementations that run on the GraalVM.

LLVM runtime

In keeping with the polyglot nature of the platform, GraalVM also includes an LLVM runtime that can execute any program that has been compiled into LLVM bitcode. LLVM support allows C, C++, and many other languages to execute on the VM.

JavaScript Runtime

Also included is the GraalVM JavaScript runtime. This runtime provides a fully ECMAScript-compliant runtime to run JavaScript and Node.js applications on the GraalVM.

Native Image (AOT)

We have seen that GraalVM provides support for a diverse set of languages in addition to Java, but we still haven't addressed the startup time and memory footprint issue. If you've read this far, your efforts are about to pay off. If the previous features weren't enough, the GraalVM team includes Native Image support. GraalVM Native Image dispenses with the JVM completely and performs ahead-of-time (AOT) compilation to a standalone executable. The standalone executable includes all of the classes required by the application, including all library classes as well as runtime classes and statically linked native code. To accomplish this, GraalVM embeds the Substrate VM to provide thread scheduling, garbage collection, and other core runtime features. This approach delivers on our search for faster startup times and reduced memory footprint. How much faster? According to the GraalVM website, we get these infographics:

Those are the kind of improvements we have been looking for!

Faster Startup

So how does native image achieve these improvements? To improve startup speed, the GraalVM eliminates classloading at run time. Each native image executable contains only the classes discovered during the image build process, already loaded and linked. This process can take some time, but it only has to happen once, at build time. Another advantage of the AOT build is that the executable no longer needs to interpret application bytecode. We can eliminate the just-in-time (JIT) compiler and interpreter phase of startup. Lastly, the build process can generate a largely initialized heap and absorb this cost during the build phase. When the native image application starts, its heap has already been created and is ready to run.

Smaller memory footprint

By only packaging the classes required by the application, we can shrink the memory footprint the same way we did with jdeps and jlink. However, we can also eliminate all JVM metadata for those classes as well. We also no longer need a JVM performing all its dynamic optimizations. The native image can also eliminate all application profiling data, the JIT compiler, and its corresponding data structure since the code has already been compiled into native code.

Native Image limitations

When we remove the JVM from the deployment equation, we must, unfortunately, make some sacrifices. The first casualty is reflection. Any code that leverages Java's reflection facilities must provide additional configuration to the application to supplement the missing JVM. We also lose the ability to generate thread and heap dumps. We lose these capabilities in native images since we no longer have a JVM to connect to via JVMTI or JMX, or to attach Java agents to.

We also need to make application size concessions. Because the Substrate VM uses a serial garbage collector (SerialGC), it is recommended that native image applications be limited to small heap sizes.

The serial collector is usually adequate for most small applications, in particular those requiring heaps of up to approximately 100 megabytes on modern processors. The other collectors have additional overhead or complexity, which is the price for specialized behavior. If the application does not need the specialized behavior of an alternate collector, use the serial collector. One situation where the serial collector isn't expected to be the best choice is a large, heavily threaded application that runs on a machine with a large amount of memory and two or more processors.

We must also concede that the native image application will likely not be as efficient as its JVM counterpart. Without the benefit of the JIT compiler, we no longer have a mechanism for execution-time profiling and optimization.

Lastly, because several of Java's features are facilitated by the host JVM (e.g., reflection, dynamic class loading, classpath handling, dynamic proxies, and classpath resources), we need a mechanism to compensate for the work the JVM performs to handle these features. This mechanism comes in the form of additional native image configuration files.
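
To make the reflection case concrete, the sketch below shows the kind of code a static analysis cannot fully follow: the class name arrives as data at run time. The class `ReflectiveCall` and its `invoke` helper are hypothetical names for this illustration. On a conventional JVM this runs as is. In a native image, a lookup like this would generally need the target class declared in the reflection configuration, because the builder cannot know which class will be requested.

```java
import java.lang.reflect.Method;

public class ReflectiveCall {

    // Looks up a class by a name known only at run time and invokes a
    // public no-argument method on a fresh instance.
    static Object invoke(String className, String methodName) throws ReflectiveOperationException {
        Class<?> type = Class.forName(className);
        Object target = type.getDeclaredConstructor().newInstance();
        Method method = type.getMethod(methodName);
        return method.invoke(target);
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        // The class name comes from the command line when given, so it is
        // invisible to any analysis performed at build time.
        String className = args.length > 0 ? args[0] : "java.util.ArrayList";
        System.out.println(invoke(className, "size")); // prints 0 for the default
    }
}
```

By contrast, a call such as `Class.forName("java.util.ArrayList")` with a constant string is the sort of usage the builder's static analysis has a chance of detecting on its own.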
