Photo by Frank McKenna on Unsplash.com

Now that we have a basic understanding of microservices and a few patterns under our belt, let's turn our attention to how we deploy our services.

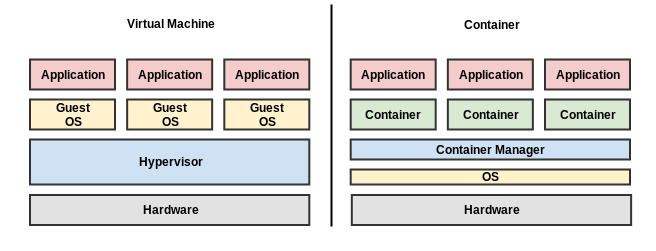

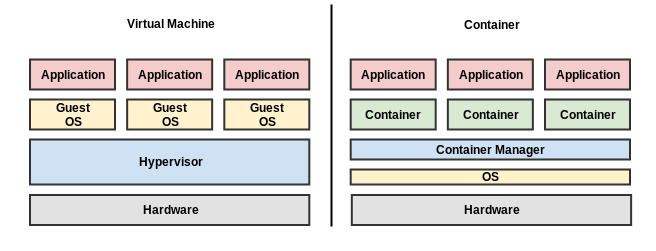

Deploying a suite of microservices is substantially different from deploying a monolithic application. This difference is due in large part to the stand-alone nature and elastic scaling of these services. The complexity these two aspects add can make microservice-based architectures considerably more challenging to manage. Gone are the days of simply deploying a new WAR or EAR file to an application server.

To deal with this complexity, we introduce the service container concept.

Service Container

As if microservice architectures didn't already introduce enough complexity, we now bring container technology into the equation. Containers are an operating-system-level virtualization mechanism that allows multiple isolated user-space instances to share the same OS kernel. A service container is simply a container packaged with a microservice and all of its execution dependencies.

Containers vs. Virtual Machines

It can be easy to confuse containers with virtual machines. On the surface, they appear similar: both provide a mechanism to deploy multiple isolated services on the same hardware. However, they accomplish this in radically different ways.

A virtual machine is software that emulates the hardware of a computer system. It allows multiple operating system instances to run simultaneously on the same hardware, which is accomplished using a hypervisor.

A hypervisor (also known as a virtual machine monitor) creates and runs virtual machines. Hypervisors are commonly grouped into three types: type 0 hypervisors are implemented in hardware or firmware, type 1 (bare-metal) hypervisors run directly on the host hardware, and type 2 (hosted) hypervisors run as applications on top of a conventional operating system. Each virtual machine is referred to as a guest machine, and the computer that runs the hypervisor is generally referred to as the host. Each guest operating system is presented with a virtual operating platform that mediates access to the underlying hardware resources. Because every virtual machine runs its own complete operating system, all of that operating system's resources are available to the guest's applications.

Unlike hypervisor-based virtual machines, which virtualize the underlying hardware, containers virtualize the operating system. With operating-system virtualization, containers share the host OS kernel as well as binaries and libraries, but run their applications in isolated user-space instances. Because containers share much of the underlying operating system, they start faster and carry lower resource overhead than virtual machines, making them an ideal deployment platform for microservices.
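As a small illustration of kernel sharing, the following Java sketch prints the release of the kernel it is running on. It assumes a Linux host and relies on the JVM reporting the running kernel's release through the standard `os.version` system property. Run on the host and then inside a container on that same host, it should print the same release, because the container has no kernel of its own; inside a virtual machine it would instead report the guest operating system's kernel.

```java
public class KernelRelease {

    /** Returns the release of the kernel this JVM is running on, as reported by the JVM. */
    static String kernelRelease() {
        // On Linux, the JVM fills os.version from the running kernel's release (as `uname -r` shows).
        return System.getProperty("os.version");
    }

    public static void main(String[] args) {
        System.out.println(System.getProperty("os.name") + " kernel release: " + kernelRelease());
    }
}
```

Compile and run it on the host, then run the same class inside any JDK-based container image on that host; the two release strings should match.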

Microservices + Containers = ideal match

Containers are a powerful complementary technology to microservices. Containers avoid the "works on my machine" problem by packaging up a working instance of the service (containerization), shipping it to its destination(s), and executing it without change. This portability allows crashed services to be quickly redeployed, as well as replicated on different machines.

The ability to easily ship containerized services across multiple hosts avoids environment drift between development, testing, and production. Because each service is packaged with all of its dependencies, the same artifact runs in every environment, so the service behaves consistently in each one.

By leveraging an already running operating system, containers avoid the longer startup times common with virtual machines. Each service's memory footprint is also smaller because containers share so much of the operating system. With lower resource requirements, a host can support more services using containers than it could with a virtual machine approach.

Container Runtimes

So far, we have been discussing containers at a conceptual level. However, before we can containerize our first service, we have to choose a container runtime for the containers to execute in. The following list illustrates a sampling of the options available:

- Docker is the most mature of the group, having brought containerization to the masses. While Docker's share has shrunk to 83% of the market (2018), it is still the most common container runtime.

- CoreOS rkt has helped erode Docker's market share, taking 12% of the market (2018). It has accomplished this in large part due to its out-of-the-box support for Kubernetes container orchestration (which we will introduce in the next article).

- Mesos Containerizer, from Apache, has 4% of the market (2018) and has made significant inroads, primarily due to its use in the Big Data application space.

- containerd is a daemon for Linux that can manage the entire container lifecycle. It is expected to graduate from the Cloud Native Computing Foundation in early 2019. The project's stated goals are simplicity, robustness, and portability.

- Podman is a container runtime that takes an alternative approach to creating containers. Instead of the client/server model common to Docker and many of its peers, Podman uses a traditional fork/exec model. By eliminating the daemon, Podman reduces overhead and increases container security. Its pods work the same way as Kubernetes pods, which simplifies the transition between the two technologies. Podman is one to watch, as it has been engineered to be a drop-in replacement for Docker.

The safe bet

Since we are just starting to explore the container space, we will begin with Docker. While the other runtimes have interesting attributes, Docker is still the leader in the enterprise market, making it the obvious choice.

Docker

Docker has been around since 2013 and builds on Linux container technology (its early releases were based on LXC, Linux Containers). It simplifies the process of building containers that can be easily shared and run unmodified on most public cloud platforms. Docker containers are sandboxed environments running on a shared kernel, so they are very lightweight and start quickly. Docker provides a container registry (Docker Hub) that hosts tens of thousands of public images and can also host private images.
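To make the registry side more concrete, here is a simplified Java sketch (Java 16 or later, for records) of how a short image name such as `ubuntu` resolves to an address in Docker Hub's registry API. It assumes the Registry HTTP API v2 manifest endpoint (`/v2/<repository>/manifests/<tag>`), Docker Hub's registry host `registry-1.docker.io`, and the implicit `library/` namespace used for official images. The `ImageReference` type is hypothetical, and the sketch deliberately ignores digests (`@sha256:...`) and authentication (Docker Hub requires a bearer token even for anonymous pulls), so treat it as an illustration of image naming rather than a registry client.

```java
/** A simplified, hypothetical model of a Docker image reference (digests are not handled). */
public record ImageReference(String registry, String repository, String tag) {

    static final String DOCKER_HUB_REGISTRY = "registry-1.docker.io";

    /** Parses references such as "ubuntu", "nginx:1.25", or "registry.example.com/shop/orders:1.4". */
    static ImageReference parse(String ref) {
        String registry = DOCKER_HUB_REGISTRY;
        String rest = ref;

        // A leading component that looks like a host name (contains '.' or ':', or is "localhost")
        // names the registry; otherwise the image lives on Docker Hub.
        int slash = ref.indexOf('/');
        if (slash > 0) {
            String first = ref.substring(0, slash);
            if (first.contains(".") || first.contains(":") || first.equals("localhost")) {
                registry = first;
                rest = ref.substring(slash + 1);
            }
        }
        // "docker.io" is the user-facing name for Docker Hub; its registry API is served elsewhere.
        if (registry.equals("docker.io") || registry.equals("index.docker.io")) {
            registry = DOCKER_HUB_REGISTRY;
        }

        // A ':' after the last '/' separates the tag; without one, Docker assumes "latest".
        String tag = "latest";
        int colon = rest.lastIndexOf(':');
        if (colon > rest.lastIndexOf('/')) {
            tag = rest.substring(colon + 1);
            rest = rest.substring(0, colon);
        }

        // Official Docker Hub images live under the implicit "library/" namespace.
        if (registry.equals(DOCKER_HUB_REGISTRY) && !rest.contains("/")) {
            rest = "library/" + rest;
        }
        return new ImageReference(registry, rest, tag);
    }

    /** URL of the image manifest in the Registry HTTP API v2, which a pull reads before fetching layers. */
    String manifestUrl() {
        return "https://" + registry + "/v2/" + repository + "/manifests/" + tag;
    }

    public static void main(String[] args) {
        for (String ref : args.length > 0 ? args : new String[] {"ubuntu", "nginx:1.25"}) {
            System.out.println(ref + " -> " + parse(ref).manifestUrl());
        }
    }
}
```

Run without arguments, it prints `ubuntu -> https://registry-1.docker.io/v2/library/ubuntu/manifests/latest` and `nginx:1.25 -> https://registry-1.docker.io/v2/library/nginx/manifests/1.25`, showing how much the short names used with `docker pull` leave implicit.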

Docker Components

Docker is composed of the following components:

Docker Image

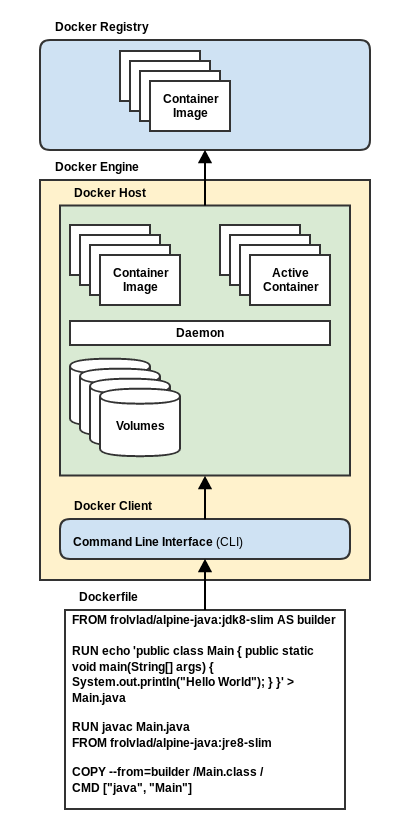

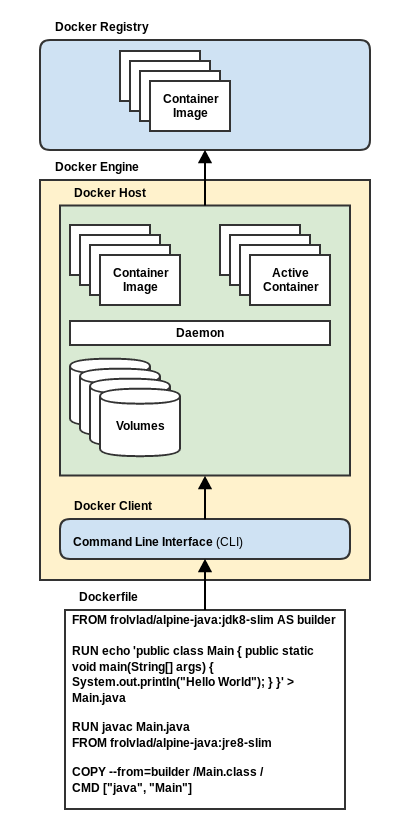

A Docker image is a read-only template that the Docker Engine uses to create containers. Images allow the application to be stored, shipped, and executed. Every image is built from a set of instructions declared in a Dockerfile. Instructions that modify the filesystem (such as RUN, COPY, and ADD) each add a new layer to the image, and Docker uses a union filesystem to combine these layers into a complete image. The layered approach allows the container developer to compose images, adding new functionality or overriding previously declared functionality. This approach simplifies development by extending base images, and it accelerates container changes because only the layers affected by a change need to be rebuilt.

Dockerfile

The Dockerfile is the collection of instructions used to build a particular container image. In the illustration above, the Dockerfile starts from a base image containing a pared-down Java 8 environment. It then creates a simple Java class, compiles it, and runs it.

Docker Registry

A Docker registry provides a content delivery system for both public and private Docker images. Docker clients connect to registries to upload (push) and download (pull) images. The registry provides the central hub from which container images are managed.

Docker Engine

The Docker Engine provides a lightweight runtime and the necessary tooling for building container images and managing containers. It is composed of three parts:

- Daemon: The daemon is a server process that runs natively on Linux systems and exposes a REST API endpoint to control the Docker Engine.

- REST API: This API provides the communication channel over which the Docker daemon is controlled.

- Command-Line Interface (CLI): The CLI communicates with the daemon's REST API to control the daemon.
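Because the CLI is just a client of the daemon's REST API, any program can talk to the daemon the same way. The Java sketch below (Java 16 or later, for Unix domain socket support) assumes a default Linux install where the daemon listens on `/var/run/docker.sock` and the caller has permission to use that socket. It sends a bare HTTP request for the Engine API's `/version` endpoint and prints the raw response, which carries roughly the server details that `docker version` reports. The class and helper names are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;

public class DockerVersionProbe {

    // Default location of the Docker daemon's Unix socket on Linux (assumes a standard install).
    static final Path DOCKER_SOCKET = Path.of("/var/run/docker.sock");

    /** Builds a minimal HTTP/1.0 GET request for an Engine API path such as "/version". */
    static String buildRequest(String apiPath) {
        return "GET " + apiPath + " HTTP/1.0\r\n"
                + "Host: localhost\r\n"
                + "\r\n";
    }

    /** Sends the request to the daemon over its Unix socket and returns the raw HTTP response. */
    static String call(String apiPath) throws IOException {
        try (SocketChannel channel = SocketChannel.open(StandardProtocolFamily.UNIX)) {
            channel.connect(UnixDomainSocketAddress.of(DOCKER_SOCKET));

            ByteBuffer request = ByteBuffer.wrap(buildRequest(apiPath).getBytes(StandardCharsets.US_ASCII));
            while (request.hasRemaining()) {
                channel.write(request);
            }

            // With HTTP/1.0 the daemon closes the connection after responding, so read until EOF.
            ByteArrayOutputStream response = new ByteArrayOutputStream();
            ByteBuffer buffer = ByteBuffer.allocate(8192);
            while (channel.read(buffer) != -1) {
                buffer.flip();
                response.write(buffer.array(), 0, buffer.limit());
                buffer.clear();
            }
            return response.toString(StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(call("/version"));
    }
}
```

The same request can be made from a shell with `curl --unix-socket /var/run/docker.sock http://localhost/version`, which is a quick way to confirm the daemon is reachable before writing any client code.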

Docker Volumes

Every Docker container's filesystem is ephemeral: when the container is removed, its filesystem disappears. Docker volumes provide long-lived storage that allows containers to persist data outside of the container's filesystem. Because volumes exist independently of any container, they persist even when the container is rebuilt, updated, or destroyed.

Docker Containers

Docker containers are the realized instances of Docker images. Each container provides an isolated view of the underlying operating system. To accomplish this, Docker creates namespaced instances of several elements of the operating system, including:

- PID: This namespace isolates the processes executing within the container, allowing the runtime to segregate each container's processes and control their visibility.

- USER: This isolates the users and groups of a particular container from the underlying system, allowing each container to have a unique set of users and groups.

- IPC: This isolates the Inter-Process Communication resources used within the container.

- UTS: This namespace allows each container to have system identifiers (e.g., hostname) distinct from those of the underlying operating system.

- MNT: This allows each container to have its own view of the mounted elements of the filesystem.

- NET: This provides each container with its own network devices, IP addresses, and ports. Because each container has its own network stack, a single host running many containers can appear to the network as multiple machines.
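On Linux, each process's namespace memberships are exposed as symbolic links under `/proc/<pid>/ns`, with targets that look like `pid:[4026531836]`; two processes share a namespace when their link targets match. The Java sketch below reads those links for its own process for the six namespace types listed above, so running it on the host and then inside a container shows which namespaces the container was given. The class name is hypothetical, and the code assumes a Linux system with `/proc` mounted.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class NamespaceIds {

    // The namespace types discussed above, as named under /proc/<pid>/ns on Linux.
    static final List<String> KINDS = List.of("pid", "user", "ipc", "uts", "mnt", "net");

    /** Extracts the inode number from a link target such as "pid:[4026531836]". */
    static long inodeOf(String linkTarget) {
        int open = linkTarget.indexOf('[');
        int close = linkTarget.indexOf(']', open);
        if (open < 0 || close < 0) {
            throw new IllegalArgumentException("Unexpected namespace link: " + linkTarget);
        }
        return Long.parseLong(linkTarget.substring(open + 1, close));
    }

    /** Reads the namespace identifiers of the current process (Linux only). */
    static Map<String, Long> currentNamespaces() throws IOException {
        Map<String, Long> ids = new LinkedHashMap<>();
        for (String kind : KINDS) {
            Path link = Path.of("/proc/self/ns", kind);
            ids.put(kind, inodeOf(Files.readSymbolicLink(link).toString()));
        }
        return ids;
    }

    public static void main(String[] args) throws IOException {
        // Values that differ between a host run and a container run are the container's own namespaces.
        currentNamespaces().forEach((kind, id) -> System.out.println(kind + " -> " + id));
    }
}
```

Expect most values to differ between host and container. The `user` entry will typically match, because Docker only creates user namespaces when user-namespace remapping (`userns-remap`) is enabled in the daemon configuration.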

In addition to namespaces, Docker leverages a Linux kernel feature called control groups (cgroups), which limits and isolates system resources (memory, disk I/O, network, and CPU) for a set of processes. Cgroups allow Docker to control how much of the underlying system's resources a given container can use. In addition to enabling fine-grained tuning of each container, cgroups prevent a single runaway container from taking over or crashing the entire system.
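The limits Docker applies through cgroups are visible from inside the container. The Java sketch below assumes a host using cgroup v2 and Docker's default private cgroup namespace, so the container's own limits appear at the root of `/sys/fs/cgroup`. There, `memory.max` holds a byte count (or `max` for no limit), and `cpu.max` holds a quota and a period in microseconds (with `max` as the quota when unlimited). The class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.OptionalDouble;
import java.util.OptionalLong;

public class CgroupLimits {

    /** Parses cgroup v2 memory.max: a byte count, or "max" when no limit is set. */
    static OptionalLong parseMemoryMax(String content) {
        String value = content.trim();
        return value.equals("max") ? OptionalLong.empty() : OptionalLong.of(Long.parseLong(value));
    }

    /** Parses cgroup v2 cpu.max ("<quota> <period>") into a CPU count, or empty when unlimited. */
    static OptionalDouble parseCpuMax(String content) {
        String[] fields = content.trim().split("\\s+");
        if (fields[0].equals("max")) {
            return OptionalDouble.empty();
        }
        double quota = Double.parseDouble(fields[0]);   // microseconds of CPU time allowed per period
        double period = Double.parseDouble(fields[1]);  // length of the period in microseconds
        return OptionalDouble.of(quota / period);
    }

    public static void main(String[] args) throws IOException {
        // Assumes cgroup v2 and a private cgroup namespace, so the container's limits sit at the root.
        Path root = Path.of("/sys/fs/cgroup");
        OptionalLong memory = parseMemoryMax(Files.readString(root.resolve("memory.max")));
        OptionalDouble cpus = parseCpuMax(Files.readString(root.resolve("cpu.max")));
        System.out.println("memory limit: " + (memory.isPresent() ? memory.getAsLong() + " bytes" : "none"));
        System.out.println("cpu limit:    " + (cpus.isPresent() ? cpus.getAsDouble() + " CPUs" : "none"));
    }
}
```

For example, a container started with `docker run --memory=512m --cpus=1.5 ...` should see `536870912` in `memory.max` and `150000 100000` in `cpu.max`, which the sketch reports as a limit of 1.5 CPUs.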

Microservice and Containers

Now that we have a cursory understanding of containers, let's look at how we can use them with our microservice architecture. Using containers allows us to package all of a service's dependencies into a single image. This image gives us the freedom to ship the service to whatever location we choose, with confidence that it will execute correctly. Once a service has been containerized, it is easier to scale by simply deploying the container multiple times, and each replica behaves identically. With containers, we can also isolate the service's execution environment (via cgroups), which prevents any single service from dominating its host's resources.

Summary

In this article, we have seen how containers work and how they complement microservices. We have seen that several container runtimes are available and that, with the help of the Open Container Initiative, we should see container portability between runtimes in the near future. We have introduced Docker, the leading container runtime, and discussed its primary components.

Coming Up

In future articles, we will dive deeper into the details of how to build and use containers. In the meantime, I will refer the impatient reader to the official Docker "Getting Started" site for a more in-depth discussion.

Now that we have wrapped our heads around the concept of containers, we are ready for the second half of the equation. In our next post, we will introduce the concept of Microservice Container Orchestration to turn our individual services into a cohesive application.
