If at first you don't succeed...

Failure happens. One of the key aspects of any microservice architecture is inter-service communication. Once we move away from a monolithic architecture executing in a single process to many independent services running in separate processes, we become dependent on a network to link services together.

Unfortunately, both the dependent service and the network can periodically fail. Services can fail for many reasons: code defects, null-pointer exceptions, and out-of-memory errors can all crash a service. The environment in which these processes run (e.g., application servers, OS containers, virtual machines) can crash as well. Hardware failures also render services unavailable: host servers die, as do network switches and routers.

In microservice applications with elastic scaling, services are started and stopped in response to load. If a call is made to a service while it is transitioning between these states, the request may fail. Rather than simply failing and propagating an exception up to the caller, we look for a way to mitigate the failure.

The retry pattern

As the name implies, this pattern traps the exception and retries the service call after a failure occurs. While the retry pattern is simple in principle, several strategies can be employed depending on the type of failure.

Immediate Retry

This strategy is the simplest. The calling service catches the failure and immediately re-invokes the service. It is useful for unusual, infrequent failures: when this class of failure occurs, there is a high chance that simply trying the call again will succeed.
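A minimal sketch of immediate retry, assuming the service call can be wrapped in a zero-argument callable. The function name `retry_immediately`, the default of three attempts, and the choice to treat `TimeoutError` and `ConnectionError` as the transient failures worth retrying are all illustrative assumptions, not part of any particular framework.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry_immediately(call: Callable[[], T], max_attempts: int = 3) -> T:
    """Invoke `call`; on a transient failure, re-invoke it at once, up to `max_attempts` tries in total."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error: Optional[Exception] = None
    for _ in range(max_attempts):
        try:
            return call()
        except (TimeoutError, ConnectionError) as exc:  # assumed transient error types
            last_error = exc  # no delay: loop straight back and try again
    assert last_error is not None
    raise last_error  # every attempt failed: propagate the last error to the caller


# Usage: a stand-in (hypothetical) service call that fails twice, then succeeds.
attempts = {"count": 0}


def flaky_call() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


print(retry_immediately(flaky_call))  # prints "ok" on the third attempt
```

Errors outside the assumed transient set (for example a `ValueError` caused by a bad request) are not caught, so they propagate on the first attempt; retrying a call that can never succeed only wastes time.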

Retry after delay

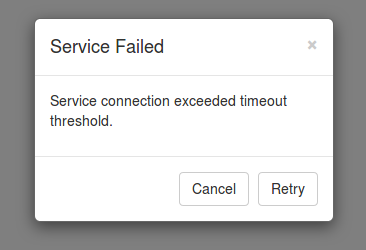

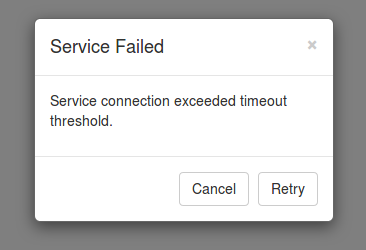

In this strategy, we introduce a delay before retrying, in the hope that the root cause of the fault has been rectified in the meantime.

Retry after delay is an appropriate strategy when a service call times out because the service is busy, or when there has been a network-related issue.
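A minimal sketch of retry after a fixed delay. The name `retry_after_delay`, the 0.5-second default delay, and the transient error types are assumptions for illustration. The `sleep` parameter defaults to `time.sleep` but can be swapped for a fake in tests so no real waiting happens.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry_after_delay(
    call: Callable[[], T],
    max_attempts: int = 3,
    delay_seconds: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` after the same fixed pause each time, giving the dependency time to recover."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except (TimeoutError, ConnectionError) as exc:  # assumed transient error types
            last_error = exc
            if attempt < max_attempts:
                sleep(delay_seconds)  # identical delay before every retry
    assert last_error is not None
    raise last_error  # retries exhausted: propagate to the caller
```

No sleep happens after the final attempt, since there is nothing left to wait for.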

Sliding Retry

In this strategy, the calling service continues to retry, adding an incrementally longer delay before each subsequent attempt. For example, the first retry may wait 200ms, the second 400ms, and the third 600ms, until the retry limit has been reached. By adding an increasing delay, we reduce the rate of retries and avoid adding load to a service that may already be overloaded.
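A minimal sketch of a sliding (linearly increasing) retry, using the 200ms / 400ms / 600ms schedule from the example above. The helper names `sliding_delays_ms` and `retry_with_schedule` are hypothetical, and the transient error types are an assumption. Separating the delay schedule from the retry loop means the loop itself does not care how the delays were computed.

```python
import time
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def sliding_delays_ms(base_ms: int, retries: int) -> List[int]:
    """Linearly increasing delays: base, 2*base, 3*base, ..."""
    return [base_ms * n for n in range(1, retries + 1)]


def retry_with_schedule(
    call: Callable[[], T],
    delays_ms: Iterable[int],
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try `call` once, then retry after each delay in `delays_ms` (milliseconds)."""
    schedule = list(delays_ms)
    for attempt in range(len(schedule) + 1):
        try:
            return call()
        except (TimeoutError, ConnectionError):  # assumed transient error types
            if attempt == len(schedule):
                raise  # retry limit reached: propagate the last error to the caller
            sleep(schedule[attempt] / 1000)  # time.sleep takes seconds
    raise AssertionError("unreachable")


print(sliding_delays_ms(200, 3))  # [200, 400, 600]
```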

Retry with Exponential Backoff

In this strategy, we take the Sliding Retry strategy and increase the retry delay exponentially rather than linearly. If we started with a 200ms delay, the next retry would wait 400ms, then 800ms, doubling each time. Again, we are trying to give the service more time to recover before we invoke it again.
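A minimal sketch of an exponential backoff schedule that reproduces the 200ms, 400ms, 800ms sequence above by doubling the delay on each retry. The function name is hypothetical, and the optional `max_delay_ms` ceiling is an added assumption (not something the strategy above requires) to keep later delays from growing without bound. The resulting list could drive any retry loop that sleeps for each delay in turn.

```python
from typing import List, Optional


def exponential_delays_ms(
    base_ms: int, retries: int, max_delay_ms: Optional[int] = None
) -> List[int]:
    """Delays that double on each retry: base, 2*base, 4*base, ..."""
    delays = []
    for n in range(retries):
        delay = base_ms * 2 ** n
        if max_delay_ms is not None:
            delay = min(delay, max_delay_ms)  # assumed optional ceiling on any single wait
        delays.append(delay)
    return delays


print(exponential_delays_ms(200, 3))  # [200, 400, 800]
```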

Abort

There comes a time when we need to throw in the towel. At some point, the cost of multiple retries in terms of response time will exceed an acceptable threshold. When this occurs, our best strategy is to abort the retry process and let the error propagate to the calling service.
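A minimal sketch of aborting once retries would exceed an acceptable response-time threshold. The name `retry_within_budget`, the `budget_s` parameter, and the transient error types are illustrative assumptions. The loop gives up, re-raising the original error to the caller, either when the delay schedule runs out or when waiting for the next retry would push past the deadline. `clock` and `sleep` default to `time.monotonic` and `time.sleep` and are injectable so the logic can be tested without real waiting.

```python
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def retry_within_budget(
    call: Callable[[], T],
    delays_s: Sequence[float],
    budget_s: float,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` using `delays_s`, but abort once the time budget would be exceeded."""
    deadline = clock() + budget_s
    attempt = 0
    while True:
        try:
            return call()
        except (TimeoutError, ConnectionError):  # assumed transient error types
            if attempt >= len(delays_s):
                raise  # no retries left: abort and propagate to the caller
            next_delay = delays_s[attempt]
            if clock() + next_delay > deadline:
                raise  # waiting again would exceed the budget: abort
            sleep(next_delay)
            attempt += 1
```

Because the clock is read after each failed call, time spent inside slow calls counts against the budget as well, not just the time spent sleeping.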

Summary

Failure is only a failure when we decide it is! The retry pattern allows the calling service to retry failed attempts in the hope that the service will respond within an acceptable window of time. By varying the interval between retries, we give the dependent service more time to respond. Of course, at some point we simply can't wait any longer and must acknowledge that the service isn't responding. We then abort the retry process and notify the caller of the error.

Coming up

In our next post, we will take a look at a pattern that helps us deal with overloaded services: the Circuit Breaker pattern.
