Historically, when we wanted to serve more users or store more data, the first choice was vertical scaling, also known as "get a bigger server." Unfortunately, there comes a time when that either becomes financially impractical or there is no longer a path to a more powerful machine. When that happens, the only option is to scale horizontally. As we begin re-engineering the application to scale horizontally, we must contend with how to manage three traits:

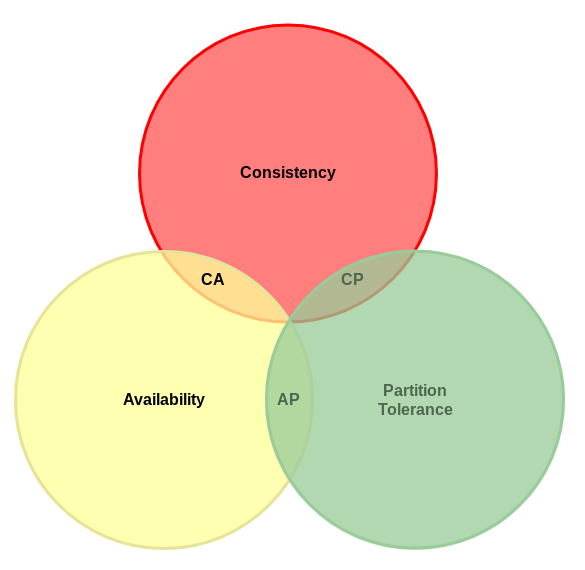

Consistency, Availability, and Partition Tolerance.

Consistency

Consistency is the system's ability to read from any replicated service instance and always get back the most recent write made to any replica of that service.

Availability

Availability is the ability of a service to execute a request and return a response within a reasonable time interval, without timing out or throwing an error. The definition of "reasonable" is, of course, application-specific.

Partition Tolerance

A network partition occurs when one or more of the application's services become unreachable. Partition Tolerance is the application's ability to continue to function in the event a network partition occurs.

CAP Theorem

The CAP theorem was first suggested by Eric Brewer in 1998 and describes the relationship between Consistency, Availability, and Partition Tolerance in distributed systems. The theorem is predicated on the fact that within distributed systems, network partitions are a fact of life and must be factored into the application's design.

The CAP theorem states that when a network partition occurs, a choice must be made between Consistency and Availability. In the absence of a network partition, both Consistency and Availability can be satisfied.

Dealing with Network Partitions

Once we accept that network partitions will occur in a distributed application, we must choose which aspect of the application's behavior we are willing to sacrifice, and to what degree:

- Sacrifice Consistency (maintain Availability and Partition Tolerance). In this scenario, we allow writes on both sides of the partition. When the partition has been resolved, both sides of the partition need to merge their data to return to a consistent state.

- Sacrifice Availability (maintain Consistency and Partition Tolerance). In this scenario, after a partition has occurred, one side of the partition is disabled to prevent inconsistency. The remaining side stays consistent, and the application continues with a lower degree of availability.
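The two choices above can be sketched with a toy pair of replicas. This is a minimal illustration under stated assumptions, not how any particular database works: the `Replica` class, the `enabled` flag, the logical timestamps, and the last-write-wins `merge` rule are all hypothetical choices made for the example. Real systems use a variety of merge strategies.

```python
from dataclasses import dataclass, field


class Unavailable(Exception):
    """Raised when a replica refuses a request to protect consistency."""


@dataclass
class Replica:
    """One copy of a key-value store (hypothetical, for illustration only)."""
    name: str
    data: dict = field(default_factory=dict)  # key -> (timestamp, value)
    enabled: bool = True

    def write(self, key, value, timestamp):
        if not self.enabled:
            raise Unavailable(f"{self.name} is disabled during the partition")
        self.data[key] = (timestamp, value)

    def read(self, key):
        if not self.enabled:
            raise Unavailable(f"{self.name} is disabled during the partition")
        return self.data[key][1]


def merge(a, b):
    """Reconcile two replicas after the partition heals.

    Assumption: last write wins, decided by the logical timestamp.
    """
    for key in set(a.data) | set(b.data):
        candidates = [r.data[key] for r in (a, b) if key in r.data]
        winner = max(candidates, key=lambda entry: entry[0])
        a.data[key] = winner
        b.data[key] = winner


def sacrifice_consistency():
    """Both sides keep accepting writes, then merge once reconnected."""
    left, right = Replica("left"), Replica("right")
    left.write("cart", "book", timestamp=1)   # write on one side
    right.write("cart", "pen", timestamp=2)   # conflicting write on the other
    diverged = left.read("cart") != right.read("cart")
    merge(left, right)                        # partition resolved
    return diverged, left.read("cart"), right.read("cart")


def sacrifice_availability():
    """One side is disabled, so the data never diverges."""
    kept, disabled = Replica("kept"), Replica("disabled")
    disabled.enabled = False                  # partition detected
    kept.write("cart", "book", timestamp=1)
    try:
        disabled.write("cart", "pen", timestamp=2)
        rejected = False
    except Unavailable:
        rejected = True
    return kept.read("cart"), rejected
```

In the first function the replicas briefly disagree and one of the conflicting writes is discarded during the merge. In the second, no conflict can arise, but clients connected to the disabled side receive errors until the partition is resolved.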

Strong Consistency

Traditional databases provide consistency through ACID transaction semantics. Every statement within a transaction must complete, or the transaction fails, and the executed statements are rolled back.

Transaction Management is required to maintain consistency during the execution of the transaction (and during any rollback that may occur). A Transaction Manager is used to manage contention between writes to the participating tables or rows. During the transaction window, the Transaction Manager locks the participating tables or rows so that other clients cannot change them. As we have seen in previous articles, these locks create contention on the resources (tables or rows) and limit their availability during the transaction. Here the Transaction Manager is prioritizing consistency over availability. We may choose to use row locks to minimize the contention, since row locks have a smaller scope than table locks, but we are still affecting availability.
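As a rough illustration of how row locks trade availability for consistency, the sketch below models a table with one lock per row. The `Table` class and its `transaction` and `try_read` methods are hypothetical names invented for this example and do not correspond to any real database API. The rollback is a simple snapshot-and-restore.

```python
import threading
from contextlib import contextmanager


class Table:
    """In-memory table with one lock per row (hypothetical sketch)."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self._locks = {key: threading.Lock() for key in self.rows}

    @contextmanager
    def transaction(self, *keys):
        acquired = []
        try:
            # Lock rows in sorted order so two transactions cannot deadlock.
            for key in sorted(keys):
                self._locks[key].acquire()
                acquired.append(key)
            snapshot = {key: self.rows[key] for key in keys}
            try:
                yield self.rows
            except Exception:
                self.rows.update(snapshot)  # roll back the locked rows
                raise
        finally:
            for key in reversed(acquired):
                self._locks[key].release()

    def try_read(self, key):
        """Return the row value, or None if a transaction holds its lock."""
        lock = self._locks[key]
        if not lock.acquire(blocking=False):
            return None
        try:
            return self.rows[key]
        finally:
            lock.release()


accounts = Table({"alice": 100, "bob": 50, "carol": 75})

with accounts.transaction("alice", "bob") as rows:
    rows["alice"] -= 30
    rows["bob"] += 30
    blocked = accounts.try_read("alice")  # row is locked by the transaction
    free = accounts.try_read("carol")     # row is outside the transaction

after = accounts.try_read("alice")        # lock released after commit
```

While the transaction is open, `alice` cannot be read by another client, but `carol` can. A table-level lock would have blocked both, which is why row locks reduce contention without eliminating it.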

The overhead incurred by the Transaction Manager is necessary to achieve high consistency and is usually a manageable compromise in a single-node system. However, achieving this level of consistency in a distributed system requires transaction management that spans all participating nodes. A distributed transaction manager would need to distribute all state changes and achieve consensus across all participating nodes before the transaction could be considered complete. The same effort would also be required when performing rollback operations.
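One well-known way to coordinate such a transaction is a two-phase commit. The article does not name a specific protocol, so treat the sketch below as one possible illustration rather than the approach it has in mind. The `Node` class, its `healthy` flag, and `run_transaction` are hypothetical, and network calls are replaced by plain method calls.

```python
class Node:
    """A participant in a distributed transaction (hypothetical sketch)."""

    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy   # False simulates an unreachable node
        self.committed = {}
        self.staged = None

    def prepare(self, changes):
        """Phase 1: stage the changes and vote yes (True) or no (False)."""
        if not self.healthy:
            return False
        self.staged = dict(changes)
        return True

    def commit(self):
        """Phase 2 (success): make the staged changes permanent."""
        self.committed.update(self.staged)
        self.staged = None

    def rollback(self):
        """Phase 2 (failure): discard anything that was staged."""
        self.staged = None


def run_transaction(nodes, changes):
    """Commit on every node only if every node votes yes."""
    votes = [node.prepare(changes) for node in nodes]
    if all(votes):
        for node in nodes:
            node.commit()
        return True
    for node in nodes:
        node.rollback()
    return False
```

Every transaction needs at least two rounds of messages to every node, and a single unreachable node forces all of them to roll back. For example, with three healthy nodes `run_transaction(nodes, {"x": 1})` commits everywhere, but after setting one node's `healthy` flag to `False`, the next call returns `False` and no node applies the change.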

Because all of these operations execute across a network of multiple nodes, the communication overhead and failure management requirements make this an impractical solution. In distributed systems, strong consistency is therefore often relaxed, and we embrace an Eventually Consistent model, in which replicas may disagree temporarily but converge once updates have propagated.
